Everything we use, from smartphones to laptops, is now smaller and lighter, yet delivers better performance.

This pattern of doing more with less applies to improvements in almost any process, and it fits hardware-related processes especially well.

Anyone who has been involved in machine learning or deep learning projects knows that, whether it is a decision model or a detection model, real-time operation is crucial.

One of the key problems of any real-time, performance-oriented application is the processing power it needs to do its job. Generally, we depend on powerful cloud GPUs or local GPU servers to run these models in real time.

However, the situation has changed. Processing devices have become smaller and more capable; at the same time, machine learning libraries have evolved considerably, bringing large tasks to small-scale devices such as mobile phones and embedded devices.

TensorFlow Lite

TensorFlow Lite is one such machine learning library. It is a set of tools that helps developers run TensorFlow models on embedded, mobile and IoT devices.

TensorFlow Lite lets devices run machine learning “at the edge” of the network, without sending data back and forth to a server.

Performing machine learning on-device can help developers to improve:

- Latency: there’s no round-trip to a server

- Privacy: no data needs to leave the device

- Connectivity: an Internet connection isn’t required

- Power consumption: network connections are power-hungry

Development workflow

The TensorFlow Lite workflow contains the following steps:

1. Pick a model

You have several options when choosing a model: develop your own TensorFlow model, use a pre-trained model, or find a model online.

2. Convert the model

If you’re using a custom model, use the TensorFlow Lite converter and a few lines of Python to convert it into the TensorFlow Lite format.

3. Deploy to your device

The TensorFlow Lite interpreter can run your model on-device, with APIs in multiple languages.

4. Optimise your model

Use the TensorFlow Model Optimisation Toolkit to reduce your model’s size and increase its efficiency with minimal impact on accuracy.
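
The conversion, optimisation and on-device steps above can be sketched in Python roughly as follows. This is a minimal sketch, assuming TensorFlow 2.x is installed and that a SavedModel already exists on disk. The function names `convert_saved_model` and `run_once`, and the paths in the usage comment, are hypothetical and chosen only for illustration. Setting `optimizations` to `tf.lite.Optimize.DEFAULT` is one way to ask the converter to shrink the model; it is not the only optimisation route.

```python
def convert_saved_model(saved_model_dir, optimise=True):
    """Convert a TensorFlow SavedModel into TensorFlow Lite FlatBuffer bytes."""
    import tensorflow as tf  # imported lazily; assumes TensorFlow 2.x is installed

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    if optimise:
        # Ask the converter to apply its default post-training optimisations.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()  # serialised model as a bytes object


def run_once(tflite_model, input_array):
    """Run a single inference with the TensorFlow Lite interpreter."""
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]
    # input_array must match the model's expected input shape and dtype.
    interpreter.set_tensor(input_info["index"], input_array)
    interpreter.invoke()
    return interpreter.get_tensor(output_info["index"])


# Hypothetical usage (the path and input are placeholders):
# model_bytes = convert_saved_model("path/to/saved_model")
# with open("model.tflite", "wb") as f:
#     f.write(model_bytes)
# result = run_once(model_bytes, some_numpy_input)
```

Running the converted model through the interpreter on a development machine before deploying it is a cheap way to check that conversion did not change its behaviour in unexpected ways.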

TensorFlow Lite for Microcontrollers

TensorFlow Lite for Microcontrollers is a port of TensorFlow Lite designed to run machine learning models on microcontrollers and other devices with very limited memory.

It doesn’t require operating system support, any standard C or C++ libraries, or dynamic memory allocation. The core runtime fits in 16 KB on an Arm Cortex M3, and with enough operators to run a speech keyword detection model, it takes up a total of 22 KB.
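
To put these figures in context, here is a rough back-of-the-envelope sketch of whether a model fits within a flash budget. It rests on several assumptions that are not stated in the text: that the 22 KB runtime figure excludes the model data itself, that 1 KB means 1024 bytes, that model size is dominated by its parameters, and that each parameter takes 4 bytes as a 32-bit float or 1 byte after 8-bit quantisation. The parameter count and flash size below are made-up examples, not measurements.

```python
RUNTIME_BYTES = 22 * 1024  # runtime plus keyword-detection operators (figure from the text)


def flash_needed(num_params, bytes_per_param, runtime_bytes=RUNTIME_BYTES):
    """Rough estimate: runtime code plus parameter storage (an assumption)."""
    return runtime_bytes + num_params * bytes_per_param


def fits(num_params, bytes_per_param, flash_bytes):
    """True if the rough estimate is within the available flash."""
    return flash_needed(num_params, bytes_per_param) <= flash_bytes


# Hypothetical example: 20,000 parameters on a device with 64 KB of flash.
params = 20_000
flash = 64 * 1024
print(fits(params, 4, flash))  # float32 weights -> False
print(fits(params, 1, flash))  # int8 weights    -> True
```

Under these assumptions the same model is too large with 32-bit weights but fits comfortably once each weight is stored in a single byte.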

Why are Microcontrollers Important?

Microcontrollers are typically small, low-powered computing devices often embedded within hardware that requires basic computation, including household appliances and Internet of Things devices. Billions of microcontrollers are manufactured each year.

Microcontrollers are often optimised for low energy consumption and small size, at the cost of reduced processing power, memory, and storage. Some microcontrollers have features designed to optimise performance on machine learning tasks.

With the ability to run machine learning models on small, microcontroller-based embedded devices, we can add AI to a vast range of hardware without relying on costly processing units or on network connectivity, which is often subject to bandwidth and power constraints and adds latency.

At the same time, running inference on-device can also help preserve privacy since no data leaves the device.

How It Transforms Your Business

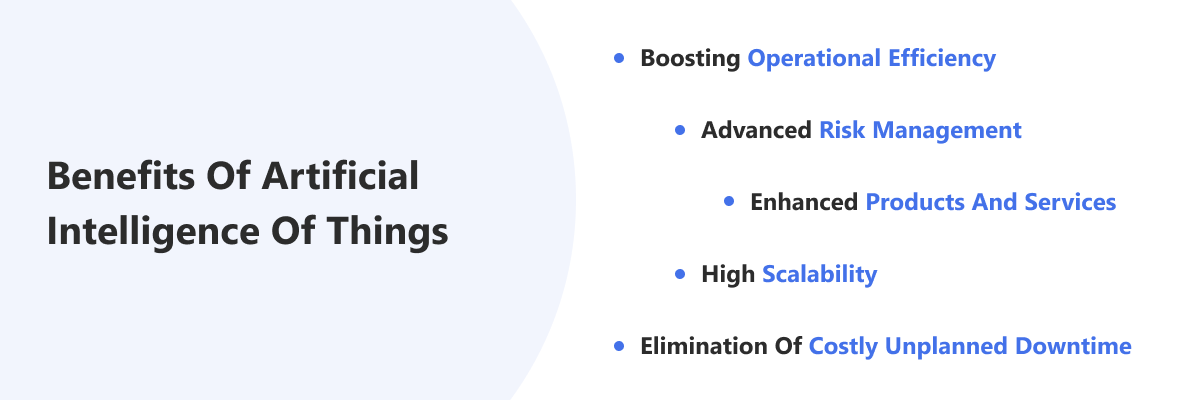

As the technology improves, we try to push solutions towards optimum performance at lower cost so that they can reach a wider range of customers. Edge device processing emerged as automation and IoT became a crucial part of major industries.

People want to reduce manual labour to improve performance and make better use of their time. As a result, AI and machine learning solutions that run on small-scale edge devices have become a high-demand tech stack in the industry.

Here is how industries are leveraging the possibilities of AI and machine learning:

- Self-driving and robotic applications.

- 90% of consumer products leverage AI on the edge.

- Shrinking microcontroller form factors and growing core processing capability open up more market opportunities for edge deployments.

- In the computer vision industry alone, 20-30% prefer edge solutions.

- Intel and Nvidia are bringing new embedded GPU and TPU devices to market.

- 95% of smart media gadgets are capable of running AI on the edge.

- Keeping data on-premises increases data security.

Developer Workflow

To deploy a TensorFlow model to a microcontroller, you will need to follow this process:

- Create or obtain a TensorFlow model: The model must be small enough to fit on your target device after conversion, and it can only use supported operations.

- Convert the model to a TensorFlow Lite FlatBuffer: The second step is to convert the TensorFlow model into the standard TensorFlow Lite format using the TensorFlow Lite converter. You may wish to output a quantised model since these are smaller in size and more efficient to execute.

- Convert the FlatBuffer to a C byte array: Models are kept in read-only program memory and are provided in the form of a simple C file. Standard tools can be used to convert the FlatBuffer into a C array.

- Integrate the TensorFlow Lite for Microcontrollers C++ library: Write your microcontroller code to collect data, perform inference using the C++ library, and make use of the results.

- Deploy to your device: Build and deploy the program to your device.
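
The FlatBuffer-to-C-array step in the list above can be sketched as follows. This is a minimal illustration of the kind of output that standard tools such as `xxd -i` produce, not the official tooling. The function name `to_c_array` and the variable name `g_model` are hypothetical choices, and the example bytes are a stand-in for the contents of a real `.tflite` file.

```python
def to_c_array(data, var_name="g_model", bytes_per_line=12):
    """Render raw bytes as C source defining a const byte array and its length."""
    lines = [f"const unsigned char {var_name}[] = {{"]
    for start in range(0, len(data), bytes_per_line):
        chunk = data[start:start + bytes_per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(data)};")
    return "\n".join(lines) + "\n"


# Stand-in bytes; in practice, read them with open("model.tflite", "rb").read().
example = bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])
print(to_c_array(example))
```

Declaring the array `const` is what allows the toolchain to place it in read-only program memory rather than in scarce RAM.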

Limitations

TensorFlow Lite for Microcontrollers is designed around the specific constraints of microcontroller development. If you are working on a Linux-based single-board computer (for example, an embedded Linux device like the Raspberry Pi), the standard TensorFlow Lite framework might be easier to integrate.

The following limitations should be considered:

- Support for a limited subset of TensorFlow operations

- Support for a limited set of devices

- Low-level C++ API requiring manual memory management

- On-device training is not supported

Conclusion

As the hardware and machine learning methods become more sophisticated, more complex parameters can be monitored and analysed by edge devices.

Because edge devices have limited memory and computation resources, training can be done on high-end GPU machines, after which the model is converted and deployed to the edge devices.

We believe that further advances in hardware and the evolution of ML algorithms will bring innovation to many industries and will truly demonstrate the transformational power of edge-based machine learning solutions.